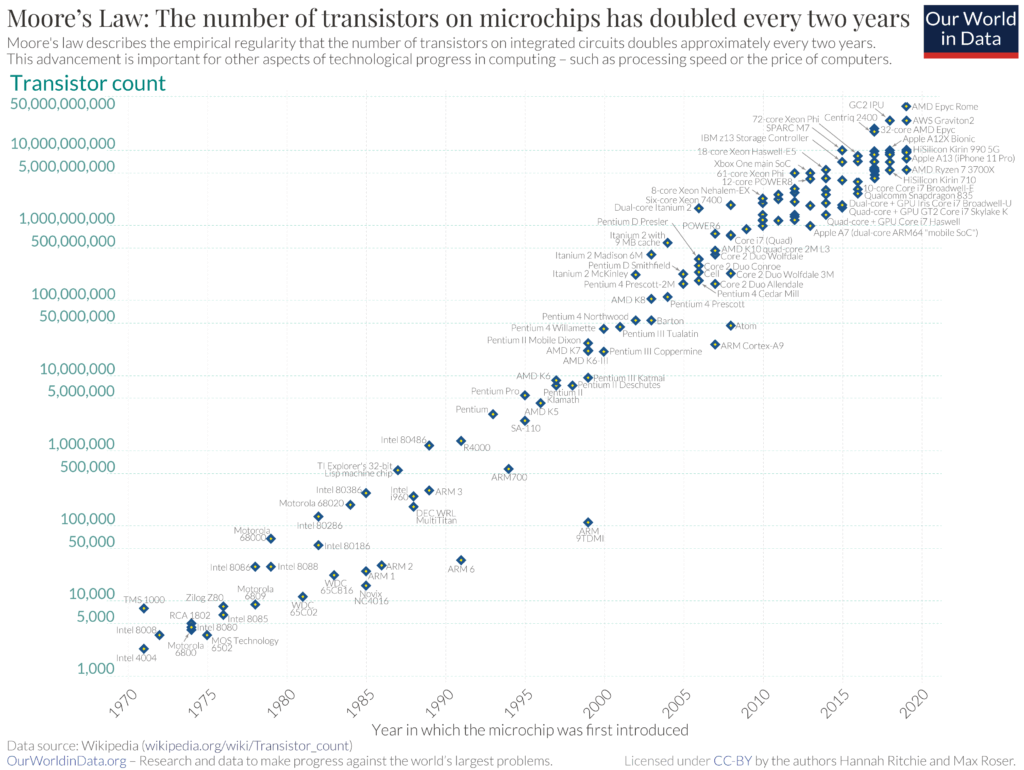

Traditional silicon-based computing, for decades governed by Moore’s Law, is reaching its physical limits.

By John Heilprin

August 7, 2025

We’re at a turning point in technology. Reaching those limits isn’t a dead end for computing, but a catalyst for a new wave of methods such as quantum, optical, and neuromorphic computing. The driving force behind this transition is the critical need for energy efficiency. While traditional technologies raise concerns about power consumption, these new systems offer a path to a more sustainable future.

Research labs are at the forefront of this shift. The ETH Zurich-PSI Quantum Computing Hub, a joint effort with the Paul Scherrer Institute, is developing quantum computers based on ion traps and superconducting components. Quantum computing uses qubits and leverages phenomena like superposition and entanglement to perform complex calculations exponentially faster for specific problems.
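To make superposition and entanglement a little more concrete, here is a minimal sketch in plain Python that tracks the four amplitudes of a two-qubit system by hand. It is an illustration only, not how the ETH Zurich-PSI hub or any real quantum hardware works; the function names (`apply_hadamard_q0`, `apply_cnot_q0_q1`, `probabilities`) are hypothetical helpers invented for this example.

```python
import math

# Amplitudes for the two-qubit basis states |00>, |01>, |10>, |11>.
# The system starts in |00>. (Illustrative toy model, not a real device API.)
state = [1.0, 0.0, 0.0, 0.0]

def apply_hadamard_q0(s):
    """Hadamard gate on the first qubit: creates an equal superposition of 0 and 1."""
    h = 1 / math.sqrt(2)
    return [
        h * (s[0] + s[2]),  # new amplitude of |00>
        h * (s[1] + s[3]),  # new amplitude of |01>
        h * (s[0] - s[2]),  # new amplitude of |10>
        h * (s[1] - s[3]),  # new amplitude of |11>
    ]

def apply_cnot_q0_q1(s):
    """CNOT gate: flips the second qubit whenever the first qubit is 1."""
    return [s[0], s[1], s[3], s[2]]

def probabilities(s):
    """Measurement probabilities are the squared magnitudes of the amplitudes."""
    return [abs(a) ** 2 for a in s]

# Superposition followed by entanglement yields a Bell state.
bell = apply_cnot_q0_q1(apply_hadamard_q0(state))
probs = probabilities(bell)
for label, p in zip(["00", "01", "10", "11"], probs):
    print(f"P({label}) = {p:.2f}")
```

In this sketch only the outcomes 00 and 11 have nonzero probability, so measuring one qubit determines the other. The list of amplitudes doubles with every added qubit, which is one reason large quantum systems are hard to simulate on classical machines.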

Fujitsu is also developing a quantum computer with more than 10,000 qubits, targeted for completion in 2030, and Amazon Braket has added new quantum processors from companies such as IQM to its cloud service, making them more accessible to researchers and businesses.

Optical and neuromorphic computing, however, are at a very different stage of development than quantum computing, which is attracting more commercial investment and cloud-based services.

The GESDA Science Breakthrough Radar® projects that meaningful applications for quantum computing will emerge within the next five years, impacting fields such as materials science, chemistry, and pharmaceuticals. Optical computing takes a different approach, using light instead of electrical currents to process information.

A major challenge for optical computing has been the lack of a suitable memory component. A recent study published in Nature Photonics showcased a new method for photonic “in-memory computing.” The breakthrough created memory cells that combine key attributes like high speed, low energy, and non-volatility in a single platform, moving optical computing from a theoretical concept to a more practical reality.

Inspired by the human brain, neuromorphic computing mimics biological processes for greater efficiency. British computer scientist Andrew Adamatzky even explores using organisms such as fungi as a form of “slow computer” for environmental sensing.

A company called FinalSpark has developed a remote platform that lets researchers run experiments on living neurons, an approach the company calls “wetware computing.” The platform aims to harness the energy efficiency of biological neurons for computation, with potential energy savings of more than a millionfold compared with digital processors. It brings an almost science-fiction element to unconventional computing.

University of Manchester researchers have developed nanofluidic memristors that mimic the memory functions of the human brain, exhibiting both short-term and long-term memory. The work is a significant step toward devices that learn and adapt in a brain-like fashion.

Practical applications and global impact

These new technologies are paving the way for real-world applications. An article published in Nature on August 4 demonstrates that 2D materials are a foundational technology, allowing for in-sensor computing.

The approach combines sensing, memory, and processing on a single platform, enabling smarter, more responsive technologies where computing is seamlessly integrated into the sensors themselves.

These advancements could lead to breakthroughs in healthcare, such as a portable neuromorphic device for rapid disease diagnosis, or quantum computers that accelerate drug discovery by simulating molecular interactions at unprecedented speeds. In agriculture, farmers could use sensors with built-in computing to optimize crop yields.

A recent trend is the development of neuromorphic chips that learn in real time on devices such as robots or sensors while consuming very little power, making smart devices more autonomous and reducing the need for constant cloud connectivity.

The potential to solve complex problems is vast, as highlighted by NASA’s “Beyond the Algorithm Challenge,” which sought innovative solutions using unconventional computing for Earth science problems like rapid flood analysis. Ultimately, these developments could lead to breakthroughs in artificial intelligence that change our daily lives.

Ethical and security challenges

The technological shift also brings challenges. The power of quantum computing could render today’s standard encryption obsolete, demanding a proactive effort to develop new cryptographic methods.

A recent paper published on arXiv specifically analyzes and compares the security risks of traditional sensor systems with emerging in-sensor computing systems, highlighting new potential attack scenarios and the need for new security frameworks.

There is also the potential for a wider digital divide, as access to these advanced technologies could be unevenly distributed. To navigate these complexities responsibly, a collaborative effort is essential.

New partnerships among governments, academic institutions, and private companies are needed to develop robust security frameworks and ensure the benefits of this new era are widely accessible. The ultimate goal is to build a future where these powerful innovations contribute to solving global challenges in a responsible way.

Where the science and diplomacy can take us

The 2024 GESDA Science Breakthrough Radar®, distilling the insights of 2,100 scientists from 87 countries, anticipates several upcoming breakthroughs in computing.